Powered Air-Purifying Respirator (PAPR) use for prevention of highly infectious viral disease in healthcare workers

A considerable body of literature has developed during the SARS-CoV-2 pandemic regarding the dental profession, aerosol generating procedures (AGPs), airborne spread of the virus and the need for enhanced personal protective equipment (PPE). Though there is evidence that dental AGPs cause significant environmental contamination, there is very little evidence linking this to direct infection of staff or patients (HPS, 2020).

To reduce the potential risk of infection via the airborne route, most national and international regulations (Clarkson et al., 2020) require that dental teams wear filtering face piece respiratory protection (FFP2/FFP3) in addition to standard PPE when performing AGPs. Following the SARS-CoV-1 outbreak in Toronto, Canada, powered air-purifying respirators (PAPRs) superseded FFP respirators in the respiratory PPE protocols (Peng et al., 2003). However, PAPRs are not mentioned as an alternative to FFP3 respirators in the PPE guidance from the Office of the Chief Dental Officer (England) (OCDO, 2020). This review is important because little information is available on the comparative practical efficacy of FFP2/FFP3 respirators and PAPRs in the clinical environment.

Methods

This systematic review was reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) standards, and the protocol was prospectively registered with the International Prospective Register of Systematic Reviews (PROSPERO). Searches were undertaken using the electronic databases Medline, Embase and the Cochrane Library (Cochrane Database of Systematic Reviews and CENTRAL). Grey literature was sought through Google Scholar, OpenGrey and GreyNet. Searches were repeated up to June 2020, and only English language studies were included.

Studies were screened by two reviewers. Risk of bias was assessed using the Cochrane Risk of Bias tool for randomized controlled studies and the ROBINS-I (Risk of Bias in Non-randomized Studies of Interventions) tool for non-randomized studies. Quality of evidence was classified according to Grading of Recommendations, Assessment, Development and Evaluation (GRADE). Because the small number of included studies were heterogeneous, a narrative review was undertaken.

Results

- 10 studies fulfilled the inclusion criteria out of a total of 690.

- In the observational studies, there was no difference in the primary endpoint of COVID-19 infection between airway proceduralists using PAPRs and those using other protective respiratory equipment.

- Healthcare workers reported improved comfort with regard to heat tolerance and visibility with PAPR technology.

- Decreased effort was needed to maintain the work of breathing compared to conventional filtering face pieces.

- Simulation studies showed lower contamination rates of skin and clothing in participants using PAPRs.

- Audibility was reduced and communication was more difficult with PAPRs, attributed to the noise generated by positive airflow and the increased weight of the equipment.

Conclusions

The authors concluded:

Field observational studies do not indicate a difference in healthcare worker infection utilizing PAPR devices versus other compliant respiratory equipment. Greater heat tolerance accompanied by lower scores of mobility and audibility in PAPR were identified. Further pragmatic studies are needed in order to delineate actual effectiveness and provider satisfaction with PAPR technology.

Comments

This was a well conducted systematic review considering the limited number of studies. Given the global nature of the pandemic, more papers might have been identified if the authors had not restricted the search to English language studies. The review appears to identify numerous advantages of PAPRs: higher assigned protection factors (APF >25), inbuilt eye protection, reusability, no need for fit testing, improved heat tolerance and comfort when worn for longer than 1 hour, and no restriction on facial hair.

Limitations include challenges in verbal communication due to the hum of the motor, maintenance and battery life, decontamination after use, and cost. Given the lack of demonstrable advantage in terms of infection prevention, institutional decision makers may be making a pragmatic choice to use FFP3 respirators over PAPRs. However, there may be considerable physical and financial advantages for health care workers and dental staff who are required to work for extended periods.

Links

Primary Paper

Use of Powered Air-Purifying Respirator (PAPR) by healthcare workers for preventing highly infectious viral diseases - a systematic review of evidence. Ana Licina, Andrew J Silvers, Rhonda Stuart. medRxiv 2020.07.14.20153288.

Other references

CLARKSON, J., RICHARDS, D. & RAMSAY, C. 2020. Aerosol Generating Procedures and their Mitigation in International Dental Guidance Documents – A Rapid Review.

OCDO. 2020. Standard operating procedure: Transition to recovery, first published 4th June [Online]. [Accessed 1st Aug 2020].

PENG, P. W., WONG, D. T., BEVAN, D. & GARDAM, M. 2003. Infection control and anesthesia: lessons learned from the Toronto SARS outbreak. Canadian Journal of Anesthesia, 50, 989-997.

Aerosol generating procedures (AGPs) and their mitigation in international guidelines: Fallow time

Link to Dental Elf

Bottom line

The recommendations for fallow time in dental practice following an AGP appear almost random in their application across the half of international guidelines that include them. The underlying idea appears to rest on a single precautionary principle, based on simulation studies that are only weakly supported by evidence. Policy makers will need to take a more balanced approach when assessing the benefits and harms that fallow time creates.

Introduction

With the publication of ‘Aerosol Generating Procedures and their Mitigation in International Dental Guidance Documents – A Rapid Review’ by the COVID-19 Dental Services Evidence Review (CoDER) Working Group (Clarkson et al., 2020), it was interesting to note their findings regarding fallow time for non-Covid-19 patients:

- 48% of the guidelines recommend having a fallow period.

- The amount of time recommended varied (2-180 mins) between guidelines and also within guidelines, depending on environmental mitigation.

- None of the fallow period recommendations referenced any scientific evidence.

The fallow period is the ‘time necessary for clearance of infectious aerosols after a procedure before decontamination of the surgery can begin’ (FGDP, 2020), and it has caused considerable discussion and stress amongst dentists returning to practice after lockdown in the UK (BAPD, 2020; Heffernan, 2020).

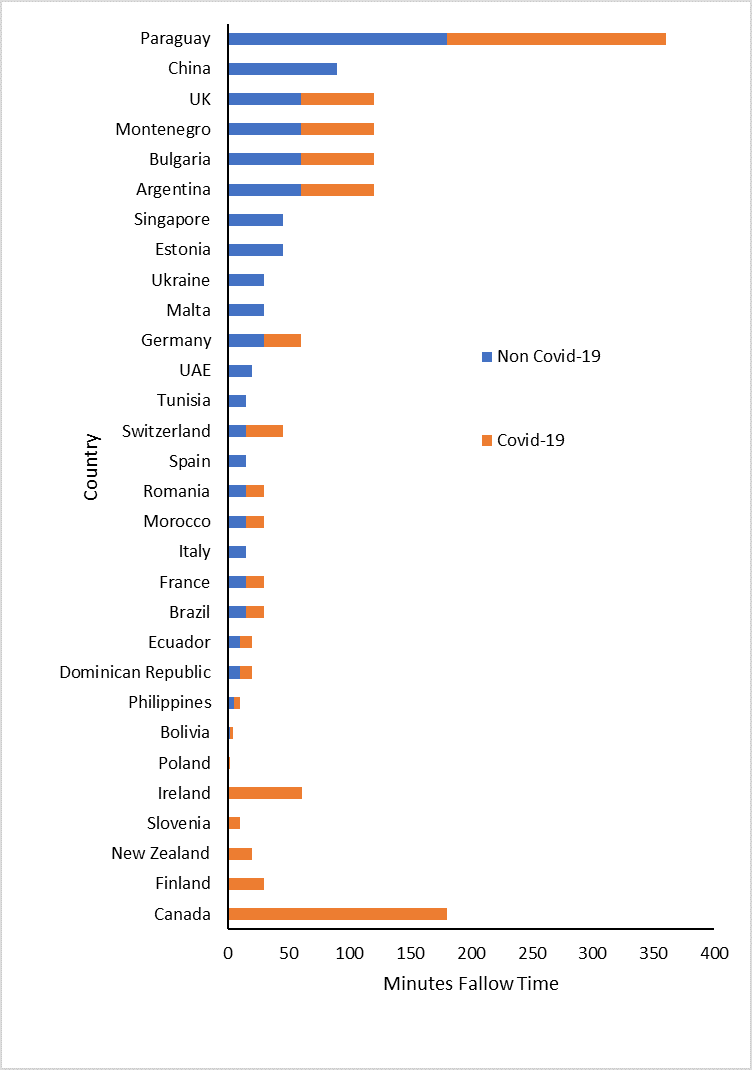

The fallow times following an AGP for both Covid-19 negative patients and Covid-19 positive patients are presented in Figure 1 and Table 2 using the data from the CoDER rapid review:

Figure 1. Stacked bar chart representing fallow times for both Covid negative and positive patients

Since the fallow times are not normally distributed, the median value was used and the 95% confidence limits were approximated according to Hill (1987).

Table 1. Median fallow time

| Patient undergoing aerosol generating procedure | Median fallow time (mins) |
| --- | --- |
| Non Covid-19 patient | 15 (95% CI: 15 to 30) |
| Covid-19 positive patient | 20 (95% CI: 10 to 60) |
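
As an illustration of how a median and an approximate 95% confidence interval can be obtained for skewed data such as fallow times, the following is a minimal Python sketch. It uses the standard distribution-free interval based on order statistics and the Binomial(n, 0.5) distribution, which is an assumption made here for illustration and is not necessarily the exact approximation described by Hill (1987). The function name `median_ci` and the sample values are hypothetical and are not the CoDER data.

```python
from math import comb
from statistics import median


def median_ci(values, conf=0.95):
    """Return (median, lower, upper) using an order-statistic interval.

    The interval runs from the j-th smallest to the j-th largest value,
    where j is the largest rank with P(X <= j - 1) <= (1 - conf) / 2
    for X ~ Binomial(n, 0.5). Coverage is at least `conf`.
    """
    xs = sorted(values)
    n = len(xs)
    tail = (1 - conf) / 2
    cumulative = 0.0
    j = 0  # rank of the lower limit (1-indexed); 0 means no valid interval
    for k in range(n):
        cumulative += comb(n, k) / 2 ** n  # adds P(X = k)
        if cumulative > tail:
            break
        j = k + 1
    if j == 0:
        raise ValueError("sample too small for the requested confidence level")
    return median(xs), xs[j - 1], xs[n - j]


# Hypothetical fallow times in minutes (illustrative only)
example_times = [2, 5, 10, 15, 15, 15, 20, 30, 60, 60]
print(median_ci(example_times))
```

Because the limits are always observed values, the interval can be asymmetric around the median, as it is in Table 1.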

Much of the theory around the need for fallow time is based on the aerosol transmission of SARS-CoV-2 and the need to allow the aerosol to settle or be physically removed from the room via ventilation or filtration. Initially the WHO supported the idea that spread was predominantly caused by large droplets and contact (WHO, 2020a), but under increased lobbying from the scientific community to include airborne transmission as a significant factor (Morawska and Milton, 2020), the WHO amended its position on 9th July 2020 (WHO, 2020b).

The evidence for airborne transmission of SARS-CoV-2 is uncertain and mostly based on mathematical modelling (Buonanno et al., 2020). Where limited observational data were available, Hota and co-workers concluded that the virus was not well transmitted by the airborne route compared to measles, SARS-1 or tuberculosis (Hota et al., 2020).

The reason there is no strong scientific evidence for fallow time and airborne transmission is possibly because it is based on two conceptual arguments:

The Precautionary Principle (PP)

The precautionary principle (PP) states that if an action or policy has a suspected risk of causing severe harm to the public domain (affecting general health or the environment globally), the action should not be taken in the absence of scientific near-certainty about its safety. Under these conditions, the burden of proof about absence of harm falls on those proposing an action, not those opposing it (Taleb et al., 2020). The confusion at present is that the PP has been reversed: the evidence-based ‘normal/enhanced PPE and cross infection policy’ is being treated as the action that must be proven safe, rather than the untested imposition of ‘fallow time’ in general practice.

The Independent Action Hypothesis (IAH)

The IAH states that each virion has an equal, non-zero probability of causing a fatal infection, especially where airborne spread via small droplets (5μm) is the proposed method of transmission (Stadnytskyi et al., 2020). In reality, the evidence supporting this theory is oversimplified and indirect, based mainly on small-sample studies of moth larvae and tobacco mosaic virus (Zwart et al., 2009; Cornforth et al., 2015).
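
To make the independent action model concrete: if each virion acts independently with the same probability p, the probability that at least one of n virions succeeds is commonly written as 1 − (1 − p)^n. The short Python sketch below evaluates that expression. The function name and the values of p and n are hypothetical and chosen purely for illustration; they are not estimates for SARS-CoV-2.

```python
from math import expm1, log1p


def independent_action_risk(per_virion_p, dose):
    """P(at least one of `dose` virions succeeds) = 1 - (1 - p)**dose.

    Computed via log1p/expm1 so that very small p values do not
    lose precision to floating-point rounding.
    """
    if not 0.0 <= per_virion_p <= 1.0:
        raise ValueError("per_virion_p must lie between 0 and 1")
    if dose < 0:
        raise ValueError("dose must be non-negative")
    if per_virion_p == 1.0:
        return 1.0 if dose > 0 else 0.0
    return -expm1(dose * log1p(-per_virion_p))


# Hypothetical per-virion probability and doses (illustrative only)
for n in (1, 100, 10_000):
    print(n, independent_action_risk(1e-6, n))
```

The model never returns exactly zero risk for a non-zero dose, which is why it pairs so readily with a precautionary argument.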

Discussion

The main problem for anyone challenging the PP and IAH is having to prove a ‘near certainty of safety’ when there are many confounding factors in play, and the application of a ‘non-zero’ probability of a single inhaled virus causing death results in an ill-defined probability of risk (Taleb et al., 2020). Unfortunately, once the PP has been invoked, designing challenge studies to create the scientific proof that the action is safe can be unethical in humans, or experimentally impossible because low viral prevalence in the community undermines statistical power.

One solution may be to rapidly assess the retrospective infection rates associated with the provision of dental care in those countries with extended fallow times and compare them to countries of similar demographics that do not have fallow time (Table 2). Policy makers may need to revise their current interpretation of the precautionary principle and IAH based on the fact that in a pandemic situation there may be multiple interacting factors that can cause significantly more second and third order harm to the public domain than the virus itself.
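
The effect of low prevalence on statistical power can be sketched with the usual normal-approximation sample size formula for comparing two proportions. The Python example below is a rough illustration only: the function name, the event rates, and the choices of 5% two-sided significance and 80% power are all assumptions introduced here, not figures from any study cited in this post.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control, p_exposed, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect a difference
    between two event proportions (two-sided test, unpooled variance)."""
    if p_control == p_exposed:
        raise ValueError("the two proportions must differ")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)
    z_beta = standard_normal.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_exposed * (1 - p_exposed)
    effect = (p_control - p_exposed) ** 2
    return ceil((z_alpha + z_beta) ** 2 * variance / effect)


# Hypothetical infection rates: the same relative reduction (a halving)
# at progressively lower baseline rates (illustrative only)
for baseline in (0.10, 0.01, 0.001):
    print(baseline, sample_size_per_group(baseline, baseline / 2))
```

As the baseline rate falls, the number of participants required to detect the same relative reduction rises steeply, which is the practical obstacle described above.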

Table 2. Countries with/without (bold) fallow time, in minutes. ND – no data

| Country | Non Covid-19 | Covid-19 | Country | Non Covid-19 | Covid-19 |
| --- | --- | --- | --- | --- | --- |
| Ireland | 0 | 60 | Burkina Faso | ND | ND |
| Poland | 0 | 0 | Canada | ND | 180 |
| Bolivia | 2 | 2 | Chile | ND | ND |
| Philippines | 5 | 5 | Colombia | ND | ND |
| Dominican Republic | 10 | 10 | Costa Rica | ND | ND |
| Ecuador | 10 | 10 | Croatia | ND | ND |
| Brazil | 15 | 15 | Denmark | ND | ND |
| France | 15 | 15 | Finland | ND | 30 |
| Italy | 15 | ND | Greece | ND | ND |
| Morocco | 15 | 15 | Guatemala | ND | ND |
| Romania | 15 | 15 | Honduras | ND | ND |
| Spain | 15 | ND | India | ND | ND |
| Switzerland | 15 | 30 | Kenya | ND | ND |
| Tunisia | 15 | ND | Kosovo | ND | ND |
| UAE | 20 | ND | Malaysia | ND | ND |
| Germany | 30 | 30 | Mexico | ND | ND |
| Malta | 30 | ND | Mozambique | ND | ND |
| Ukraine | 30 | ND | Myanmar | ND | ND |
| Estonia | 45 | ND | Netherlands | ND | ND |
| Singapore | 45 | ND | New Zealand | ND | 20 |
| Argentina | 60 | 60 | Norway | ND | ND |
| Bulgaria | 60 | 60 | Panama | ND | ND |
| Montenegro | 60 | 60 | Peru | ND | ND |
| UK | 60 | 60 | Portugal | ND | ND |
| UK – NI | 60 | 60 | Slovakia | ND | ND |
| UK – Wales | 60 | 60 | Slovenia | ND | 10 |
| China | 90 | ND | South Africa | ND | ND |
| Paraguay | 180 | 180 | UK – Scotland | ND | ND |
| Australia | ND | ND | Uruguay | ND | ND |
| Austria | ND | ND | USA | ND | ND |
| Belgium | ND | ND | Zimbabwe | ND | ND |

References

BAPD. 2020. BAPD urges government to reconsider PPE requirements for dentistry [Online]. Dentistry. [Accessed 30th July 2020].

BUONANNO, G., STABILE, L. & MORAWSKA, L. 2020. Estimation of airborne viral emission: Quanta emission rate of SARS-CoV-2 for infection risk assessment. Environ Int, 141, 105794.

CLARKSON, J., RAMSAY, C., RICHARDS, D., ROBERTSON, C. & ACEVES-MARTINS, M., on behalf of the CoDER Working Group. 2020. Aerosol Generating Procedures and their Mitigation in International Dental Guidance Documents – A Rapid Review.

CORNFORTH, D. M., MATTHEWS, A., BROWN, S. P. & RAYMOND, B. 2015. Bacterial Cooperation Causes Systematic Errors in Pathogen Risk Assessment due to the Failure of the Independent Action Hypothesis. PLoS Pathog, 11, e1004775.

FGDP. 2020. Implications of COVID-19 for the safe management of general dental practice: A practical guide [Online]. [Accessed 30th July 2020].

HEFFERNAN, M. 2020. Is a one hour fallow period really necessary for dentistry in England? [Online]. Dentistry. [Accessed 30th July 2020].

HILL, I. 1987. 95% Confidence limits for the median. Journal of Statistical Computation and Simulation, 28, 80-81.

HOTA, B., STEIN, B., LIN, M., TOMICH, A., SEGRETI, J. & WEINSTEIN, R. A. 2020. Estimate of airborne transmission of SARS-CoV-2 using real time tracking of health care workers.

MORAWSKA, L. & MILTON, D. K. 2020. It is time to address airborne transmission of COVID-19. Clin Infect Dis, 6, ciaa939.

STADNYTSKYI, V., BAX, C. E., BAX, A. & ANFINRUD, P. 2020. The airborne lifetime of small speech droplets and their potential importance in SARS-CoV-2 transmission. Proc Natl Acad Sci U S A, 117, 11875-11877.

TALEB, N., READ, R. & DOUADY, R. 2020. The Precautionary Principle (with Application to the Genetic Modification of Organisms).

WHO 2020a. Modes of transmission of virus causing COVID-19: implications for IPC precaution recommendations: scientific brief, 27 March 2020. World Health Organization.

WHO 2020b. Transmission of SARS-CoV-2: implications for infection prevention precautions 9th July. World Health Organization.

ZWART, M. P., HEMERIK, L., CORY, J. S., DE VISSER, J. A., BIANCHI, F. J., VAN OERS, M. M., VLAK, J. M., HOEKSTRA, R. F. & VAN DER WERF, W. 2009. An experimental test of the independent action hypothesis in virus-insect pathosystems. Proc Biol Sci, 276, 2233-42.